Magical (and safe) images for curious children

Approaching AI image generators for children’s apps

I just released a major overhaul of Wanderly’s image system, and I’m extremely excited about it. For the first time, characters appear in stories, and I’ve been able to reduce mismatches between story text and images. It also just feels cuter. 🥰

I’ve wanted to write a post about how I think about AI images + children’s stories for a while, and this felt like the right moment. So here goes!

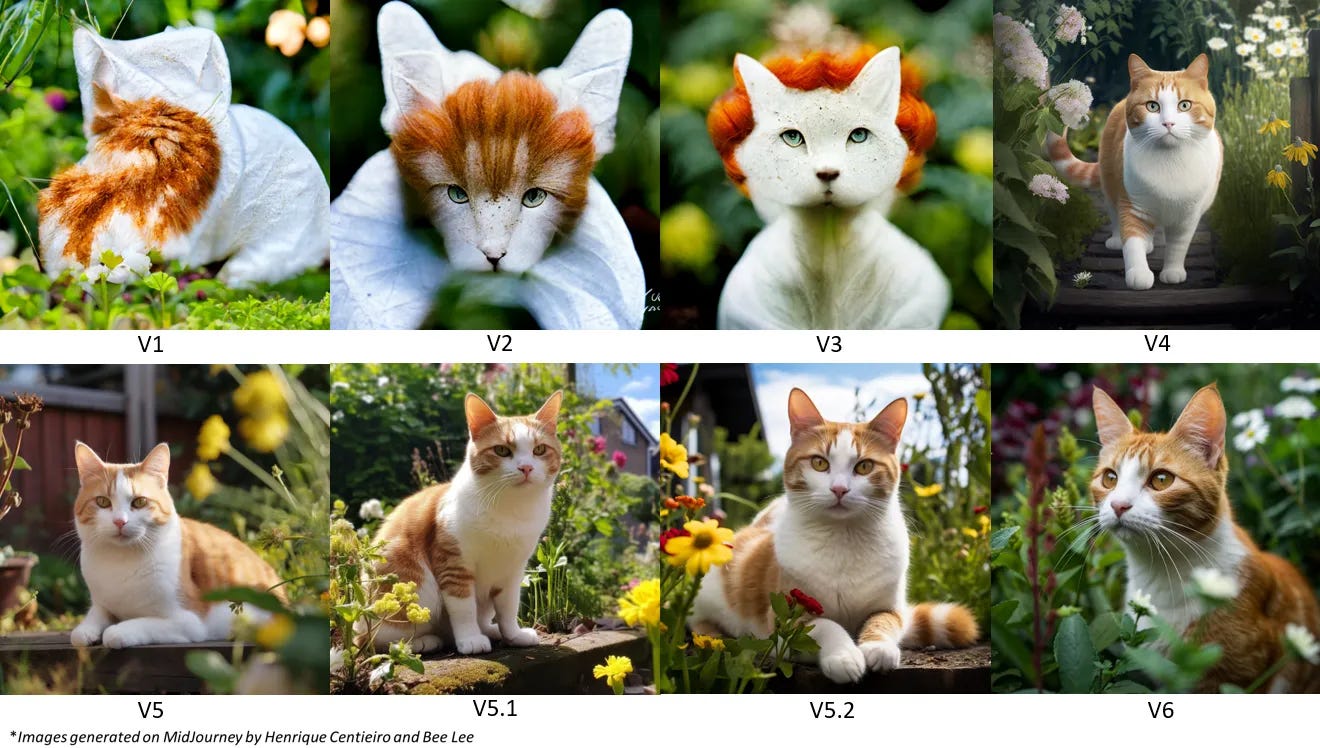

AI image generation has come a LONG way, even in the last year. Here’s how Midjourney has progressed in ~2 years:

So, what does this rapid progression mean for Wanderly? My goal with building Wanderly has always been to create a child-led, open-ended space for children to learn and explore with their imagination. AI technology is a tool that enables this type of product in a way that’s never been possible. But that doesn’t mean I will try to shove AI into as many places as possible.

I’ve wrestled a lot with how best to incorporate AI images in Wanderly: on one hand, it’s the first time we’ve ever been able to generate an on-the-fly image in 30 seconds or less using only words. On the other hand… I don’t completely trust AI image generators yet. At least not enough to leave them unsupervised with anyone’s kids. In this post, I will explain why I don’t trust AI image generators, what I think AI image generators are great at, and how I’m using (and not using) them for Wanderly.

Why I don’t trust AI image generators with children (yet)

When I say I don’t trust AI image generators with children, I mean a couple of specific things:

I have a few values that I think are important about images for kids. In essence, they boil down to “do no harm”:

It is important that Wanderly be beautiful, but it's even more important to be safe. I believe this from a brand-risk perspective, but I also believe that knowingly exposing children to an unlikely but unacceptable outcome isn’t responsible.

While these principles are an extension of my values, my experience with AI image generators made me leery of unsupervised AI image generation.

When Midjourney v5 came out (March ’23), I was just starting to add images to my Wanderly prototype. I vividly remember two experiences around image safety:

Generating images of “Venus” (the planet) and finding that 50%+ of the images included a nude woman.

Generating images of a friendly alien and finding myself with 3 images of an alien and 1 image of a dark-skinned girl. 😱

In both these cases, if these images had shown up in Wanderly (which I’m very glad they didn’t), it could have caused harm to the child reading the story and probably made the parent pretty angry.

When I encountered these examples, I decided not to dynamically generate AI images with Wanderly stories (even though my competitors are). Instead, I launched Wanderly with images generated offline, matched to a page based on keywords. That means every image on Wanderly is parent-reviewed, which keeps the image quality high. In fact, several users have said Wanderly images are their favorite feature, and I’ve even received print requests.

To Midjourney and DALLE’s credit, they’ve gotten a lot better (i.e., more “steerable”) in the last year. There’s also less body horror (i.e., most body parts are where you’d expect). When DALLE-3 came out last year, I even had a moment of existential crisis. Was I being too conservative? So I ran a few tests. It was much better, and the magic of dynamic images sorely tempted me, but there were a couple of things that kept me hesitating:

Multi-character prompts often result in chimeras (i.e., two characters blended into one hybrid character), and a key piece of Wanderly is the companions in your story.

Most image generators default to white characters (except for the hot minute when Gemini’s image models broke the internet).

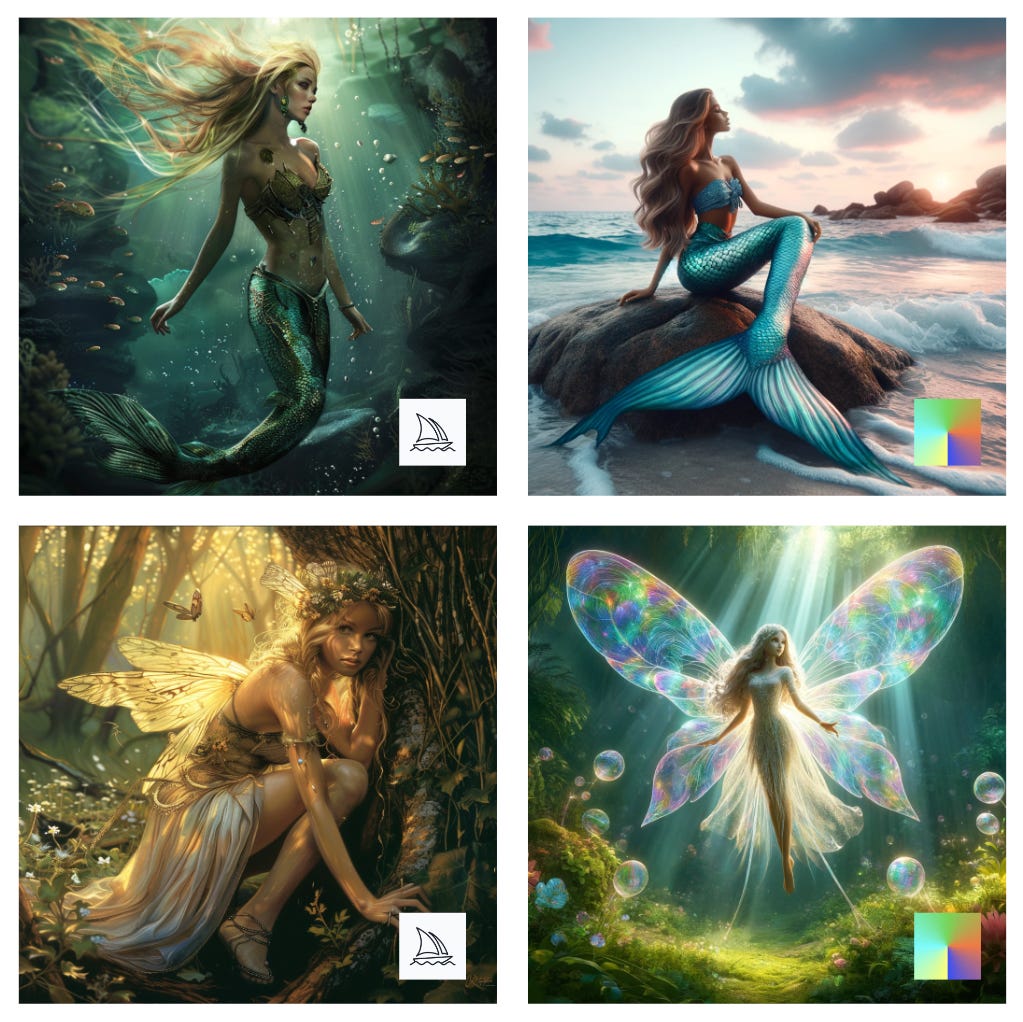

Some key children’s characters are oversexualized, like fairies[2] and mermaids.

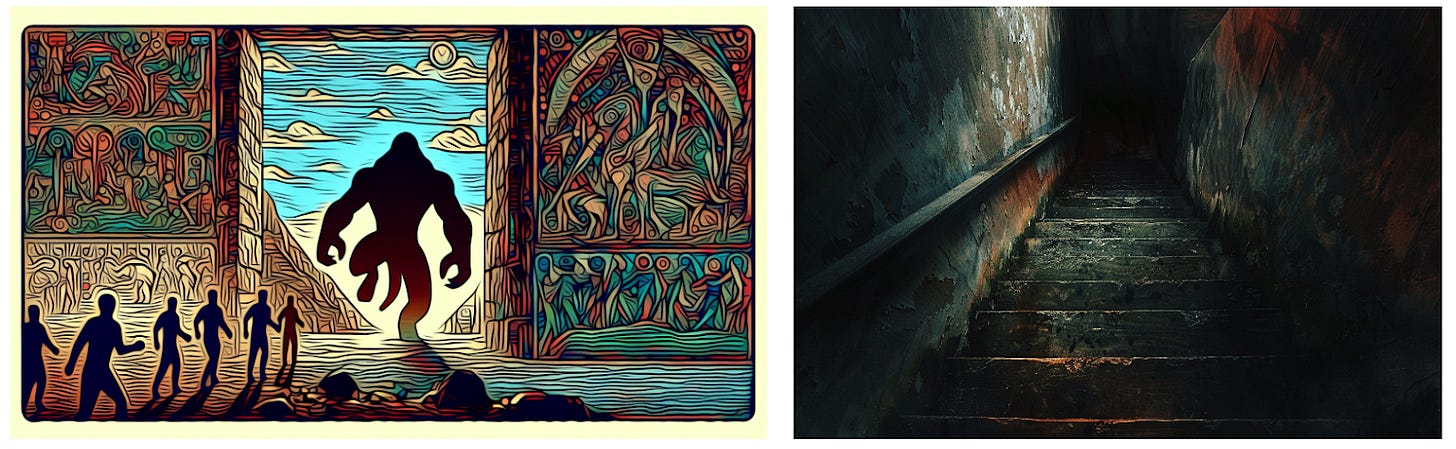

Beyond safety, I think a lot about product experience. I did a bunch of experiments where my story engine generated image prompts, which I passed to the DALLE-3 API. The images were interesting and often pretty, but covering all my bases with prompt engineering was hard. Consistency was hard to control, the AI generator often added things that weren’t asked for (like extra characters), and subjective qualities like “too scary” were hard to pin down. With this approach, there was a risk of a young child getting confused and amped up before bedtime… and all parents know that’s not a recipe for product-market fit.

What AI Image generators are great at

But AI image generators do have their uses. Almost every image in Wanderly is AI-generated… but they’re also parent-reviewed (shout out to my husband / Wanderly Art Director, who does a lot of the image generation). We also spent a lot of time aiming for quality (Midjourney for most images, since it’s much better than DALLE-3 on consistency; i.e., once you find an aesthetic you like, it’s easier to replicate).

In my opinion, AI image generators are great at cheaply creating beautiful images. If I wanted to build something like Wanderly without AI image generation, I’d need a massive budget for buying or commissioning art. There’d be no way I’d be able to build this business. I use AI-generated images for the in-app art, but I also use AI images for backgrounds for ads, stock photography, email headers, and the Wanderly logo[3]. I’m not opposed to using human-generated images either; in fact, I commissioned an artist for all of the Webster the Spider illustrations since I wanted something very specific.

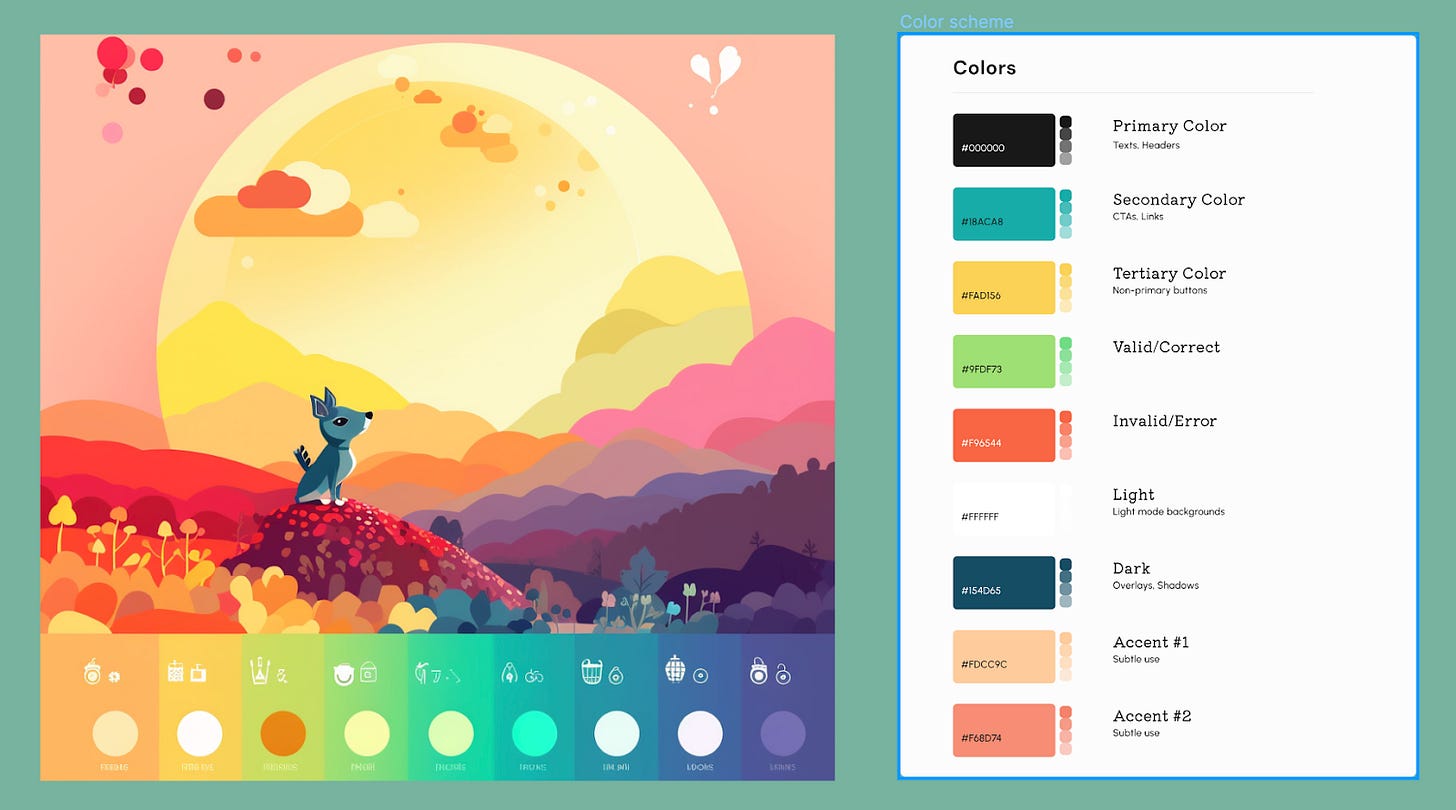

I also like using AI image generators for inspiration. I used Midjourney to brainstorm Wanderly’s colors: I asked for color palettes for a kid's app, mined images for ones I liked, and then extrapolated my app colors from the image into my Figma mocks. I also like using AI image generators to mock up new features. In a typical Google design cycle, we’d do wireframes before getting illustrations. With AI image generators, ideating mocks with high-fidelity image assets is cheap and fast.

AI Images & Wanderly

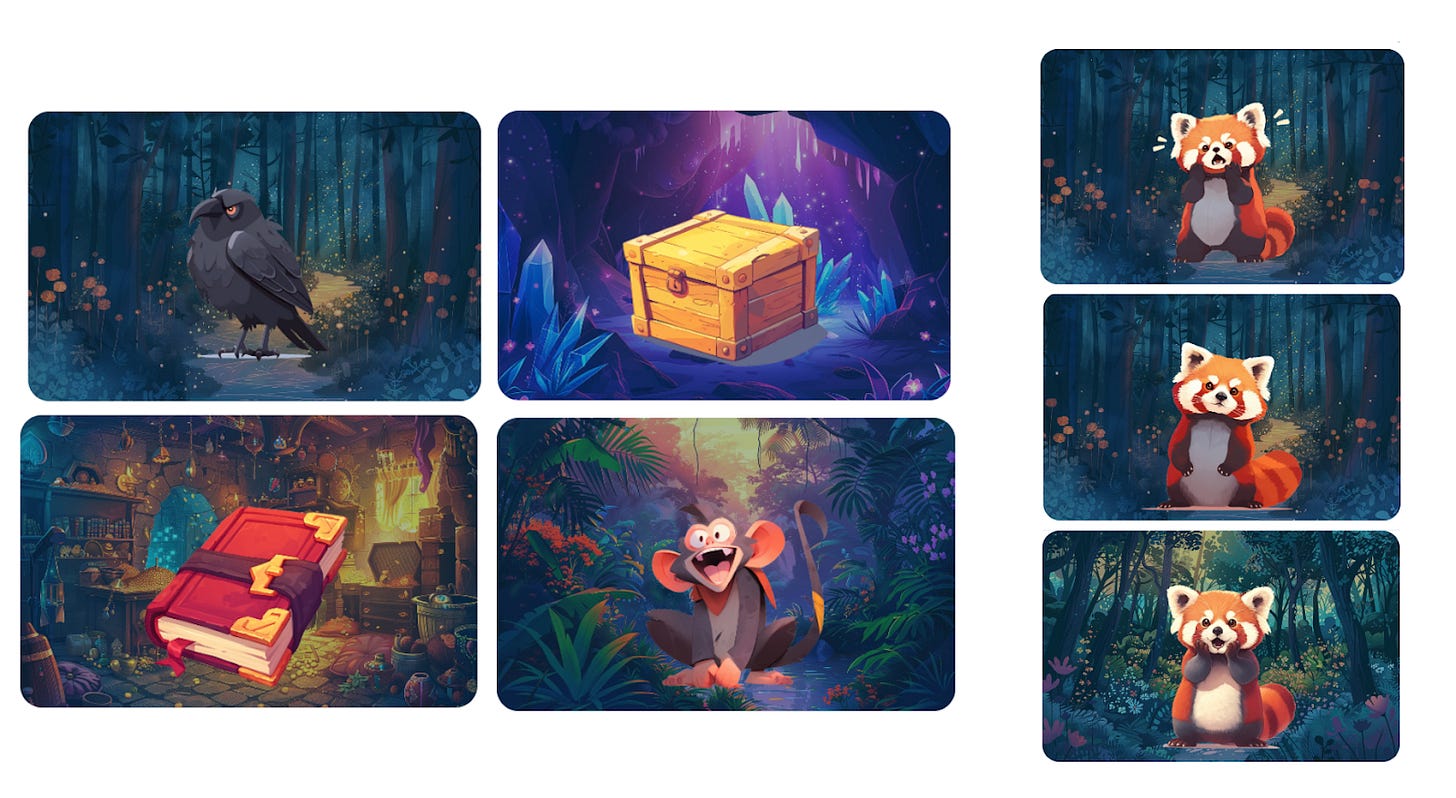

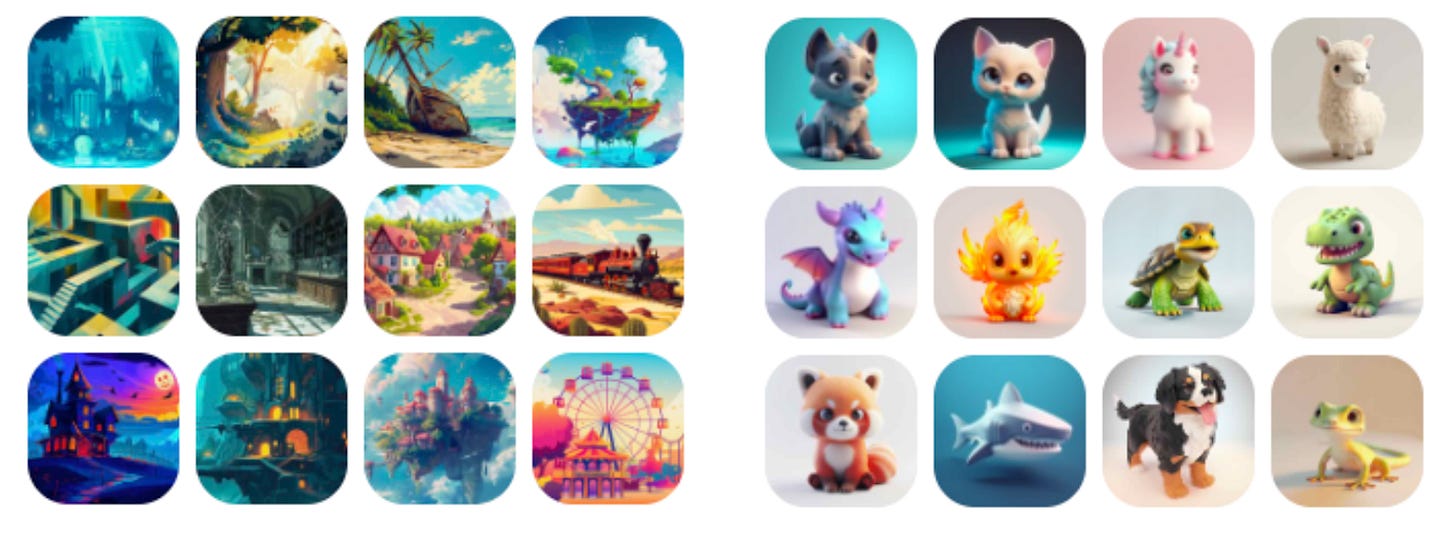

Which brings me back to Wanderly’s latest update. Before this week, Wanderly had about 200 AI-generated images of various settings or simple objects (e.g., jungle, space station, crown, ship). AI was also used to generate our avatar and pet icons:

Although Wanderly images have been a huge source of user delight, they have also been the biggest source of feedback. Keyword matching was primitive and often got things wrong (e.g., a “shark” instead of a “whale shark” or a “boat” that looked like a pirate ship when a child was reading a story about a “leaf boat”). I needed to figure out how to match images semantically to the story.
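To make that failure mode concrete, here is a minimal sketch of what keyword matching can look like. It is not Wanderly’s actual code: the lookup table, the file names, and the `match_by_keyword` helper are all invented for illustration.

```python
from typing import Optional

# Hypothetical keyword -> image table. The file names are made up for this sketch.
KEYWORD_IMAGES = {
    "shark": "great_white_shark.png",
    "boat": "pirate_ship.png",
    "jungle": "jungle.png",
}


def match_by_keyword(page_text: str) -> Optional[str]:
    """Return the image for the first known keyword found in the page text."""
    words = page_text.lower().replace(".", " ").replace(",", " ").split()
    for word in words:
        if word in KEYWORD_IMAGES:
            return KEYWORD_IMAGES[word]
    return None  # no keyword on this page


# The matcher sees "shark" and "boat" but has no idea what kind of shark or boat.
print(match_by_keyword("A gentle whale shark swam by."))
print(match_by_keyword("Mia sailed her leaf boat down the stream."))
```

Both pages get an image, just not the right one: a single keyword carries none of the context around it.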

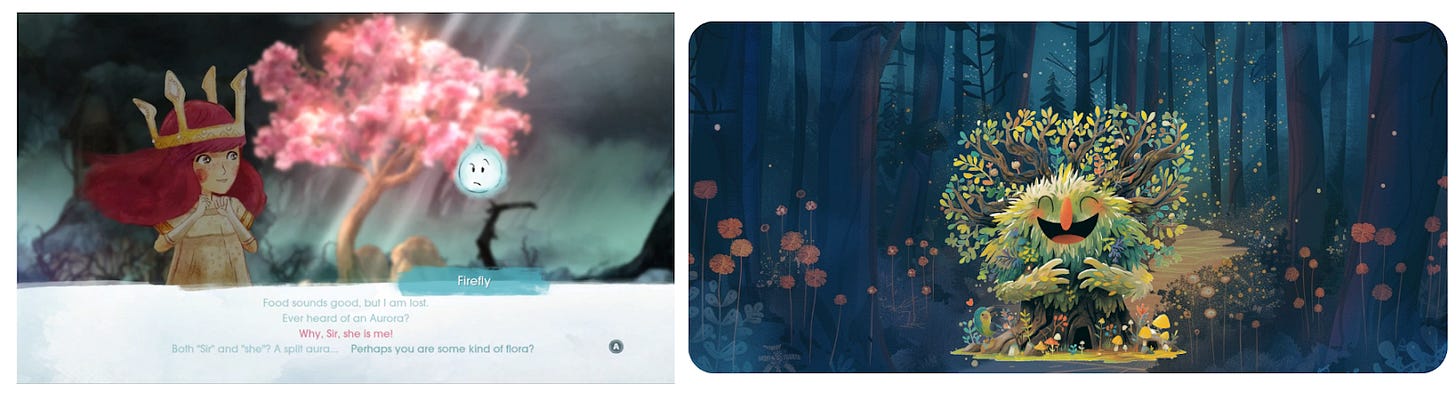

With this update, Wanderly increased the total number of images to 300+ background images and 160+ characters and objects… all matched to stories using LLMs and natural language understanding. This new system also enables foreground images and allows characters to express themselves (generated using Midjourney’s new character reference capabilities). It also mirrors an approach used by many successful video games.
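The general shape of matching against a pre-approved library can be sketched roughly like this. To be clear about the assumptions: Wanderly uses LLMs for the matching step, while this sketch swaps in a simple word-overlap score so it runs on its own. The image descriptions, file names, threshold, and fallback image are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ApprovedImage:
    filename: str
    description: str  # assumed to be written when the image was reviewed


# Hypothetical library of already-reviewed images.
LIBRARY = [
    ApprovedImage("great_white_shark.png", "a great white shark with sharp teeth"),
    ApprovedImage("whale_shark.png", "a gentle spotted whale shark swimming"),
    ApprovedImage("pirate_ship.png", "a big wooden pirate ship boat with sails"),
    ApprovedImage("leaf_boat.png", "a tiny boat made from a leaf floating on a stream"),
]

STOPWORDS = {"a", "an", "the", "on", "with", "from", "her", "his", "by", "down", "of"}


def tokens(text: str) -> set:
    """Lowercase words in the text, minus a few filler words."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def similarity(a: str, b: str) -> float:
    """Word-overlap (Jaccard) score. A stand-in for an LLM or embedding model."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def match_image(page_text: str, min_score: float = 0.2,
                fallback: str = "generic_background.png") -> str:
    """Pick the closest approved image, or a safe fallback if nothing is close."""
    best = max(LIBRARY, key=lambda img: similarity(page_text, img.description))
    if similarity(page_text, best.description) < min_score:
        return fallback  # coverage gap: no approved image fits this page
    return best.filename


print(match_image("A gentle whale shark swam by."))
print(match_image("Mia sailed her leaf boat down the stream."))
print(match_image("The robot danced on the moon."))
```

The important property is that the function can only ever return something from the reviewed library (or the fallback), so a better matcher improves relevance without changing what a child can be shown.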

An overlooked aspect of generative AI is that you really only get a certain “budget” of control over the output of these models. If you overspend your budget, you get artifacts, chimeras, or extra things you don’t ask for. It’s not a coincidence that most of the beautiful generative AI demos have simple prompts.

The models just aren’t ready yet to do multiple characters, a contextually relevant background, and character consistency all at the same time, especially not in a way that’s safe for children. So Wanderly’s approach of parent-approved images + dynamic semantic matching allows me to get the best of multiple worlds: contextually relevant, visually consistent, and safe images across multiple contexts and characters. It comes at the cost of coverage, but that can be remedied with time.

It’s not 100% where I want it yet: coverage and matching aren’t perfect, but they’re substantially better than before. I still want to implement multi-character images, but I have a path towards making this all possible within the next few months. 😊

If you’d like to check out the latest update, head over to https://wander.ly. There are character expressions for the gray wolf, lion, and red panda in Help an Animal stories, with more coming soon.

I also had the opportunity to chat with Tiffany Chin from Cocoy.AI about Wanderly, AI, education, and coding. Check it out here.

[1] This isn't my complete list of guidelines, but these are the most problematic in AI images.

[2] My husband recently made "An Explorer's Guide to the Fairy Realm" for my youngest daughter’s birthday. It is very cute, and it was also extra work for him to generate images we felt comfortable sharing with our daughters.

[3] The app is still in the works! More later, but FWIW, I used appicons.ai, and they did a good job tuning their model for app icon applications.
