Why I’m offended by Google Gemini’s Storybook…

It never should have been launched

A couple of days ago, Google announced it was getting into the story generation game with Google Gemini Storybook. As a former product leader at Google, now working in the space of interactive and personalized stories for children, I was eager to look. I was disturbed by what I found.

I have children of my own, and I hold a high bar for what I consider to be acceptable content. It takes hard work to create something safe for children, and I do that in my own work because I care. Google’s launch has demonstrated that it doesn’t care. In my opinion, this should never have been launched and should be turned off immediately.

Here are the 5 most irresponsible things about Gemini’s Storybook:

#1 Lack of image safety

Ever since AI image generation hit the scene, image quality and image safety have been areas of investment. The industry has moved beyond “AI hands”, but artifacts, over-sexualization, and other issues still exist if you’re not being thoughtful… And Gemini Storybook is not being thoughtful, especially when it comes to images of and for children.

Here are stories I was able to produce with Gemini Storybook (as of 8/7/25):

“Can you make a story about my child as a mermaid dealing with big feelings?” returned a storybook in which 5 of the 10 images featured a topless girl as a mermaid.

“Can you make a story about an alien who has trouble fitting in?” returned a story with a fully nude alien with 6 limbs.

“Create a story to help my child deal with a monster under the bed,” returned a story where the images mixed the child and the monster together. Not very reassuring!

The fact that Gemini easily produces such problematic images for a children’s storybook shows a tremendous lack of care for children’s safety.

#2 Gemini assumes too much

When I ask Gemini to write a story, it assumes it knows best. It doesn’t ask any follow-up questions; it just makes a story. This is a big problem because, as everyone at Google should know, most people write very short prompts. This means that Gemini Storybook is always assuming a lot on behalf of the reader, and will probably assume many things that are wrong.

For example, writing “my child” in a prompt becomes any child; Gemini doesn’t even ask for name, age, gender, or any distinguishing characteristics. Gemini just assumes my child is “Tilly”, “Stella”, or “Lily” (that’s not her name). Gemini assumes what my child looks like (it does not assume correctly).

In general, Gemini Storybook fills in the blanks when prompts are vague (which they often are), and it fills in the blanks incorrectly. As we’ll talk about more in a second, this causes even more problems when Gemini assumes it knows what the child is thinking.

#3 Racial bias in images

After Google got raked over the coals for overweighting diversity when Gemini’s image generation first launched, I thought they had learned from that mistake. Unfortunately, by default, every Gemini Storybook about a “child” features a light-haired, fair-skinned boy or girl.

Racial bias in images is a tricky topic, but when AI models assume that every child is White (especially if the child is not), it’s not only wrong, it’s harmful. A growing body of research shows that a lack of representation in children’s books can negatively impact literacy skills and reduce a child’s sense of belonging. I’m sure Gemini didn’t set out to make kids feel bad, but when you show children that they don’t belong in storybooks, that’s what happens.

#4 Uninformed views on child development

Gemini Storybook also tries to create stories to help children with emotional development, but its approach here is uninformed and potentially harmful, too.

For example, the first suggested prompt on the Storybook landing page is “My 7-year-old doesn't want to sleep over at their grandma's house. Create a storybook to help them cope.” Here again, Gemini trips over its assumptions by presuming it knows why the child doesn’t want to sleep at their grandma’s house; I ran the story multiple times, and it always assumes it’s because the child misses home. Assuming or projecting the reasons for a child’s discomfort robs the child of the opportunity to express their concerns (a critical emotional skill) and also suggests coping skills that might not be relevant (another important emotional skill).

I tried multiple behavior modification stories with Gemini Storybook, and each time it assumed the reason for the child’s discomfort, gave generalized behavior modification skills without allowing the child to exercise skills that will make them more resilient, or both. Imagine taking your child to a therapist because they’ve been dealing with bullying at school. The therapist says hello, doesn’t ask any questions, and then tells your child that their school troubles are due to ADHD and gives them a squishy toy to cope. That’s what Gemini Storybook is doing. It’s irresponsible.

#5 Gemini’s Storybook is an uncontrolled environment

Gemini assumes that all users of Storybook are parents, but if a child does use it, they can easily create a story about many touchy topics, including romance, religion, and politics (topics that can go sideways quickly). They would also be one click away from full access to Gemini, without the safeguards of the Storybook prompt.

As a former Product Lead at Google, if someone on my team had presented this Storybook product to me, I would have said a couple of things:

Do NOT launch it until you’ve had it reviewed by a diverse group of parents and early childhood experts and had a chance to incorporate their feedback.

Only launch this product if you’re committed to delivering the level of quality required to create children’s stories in an ongoing way, responsibly.

Now that it’s out in the world, Google should turn it off immediately. If Google chooses to keep it, it will be forced to choose between two options. Option 1: invest in a niche use case for Gemini with high PR and social risk. Option 2: don’t invest, demonstrating a lack of responsibility for child safety and childhood development.

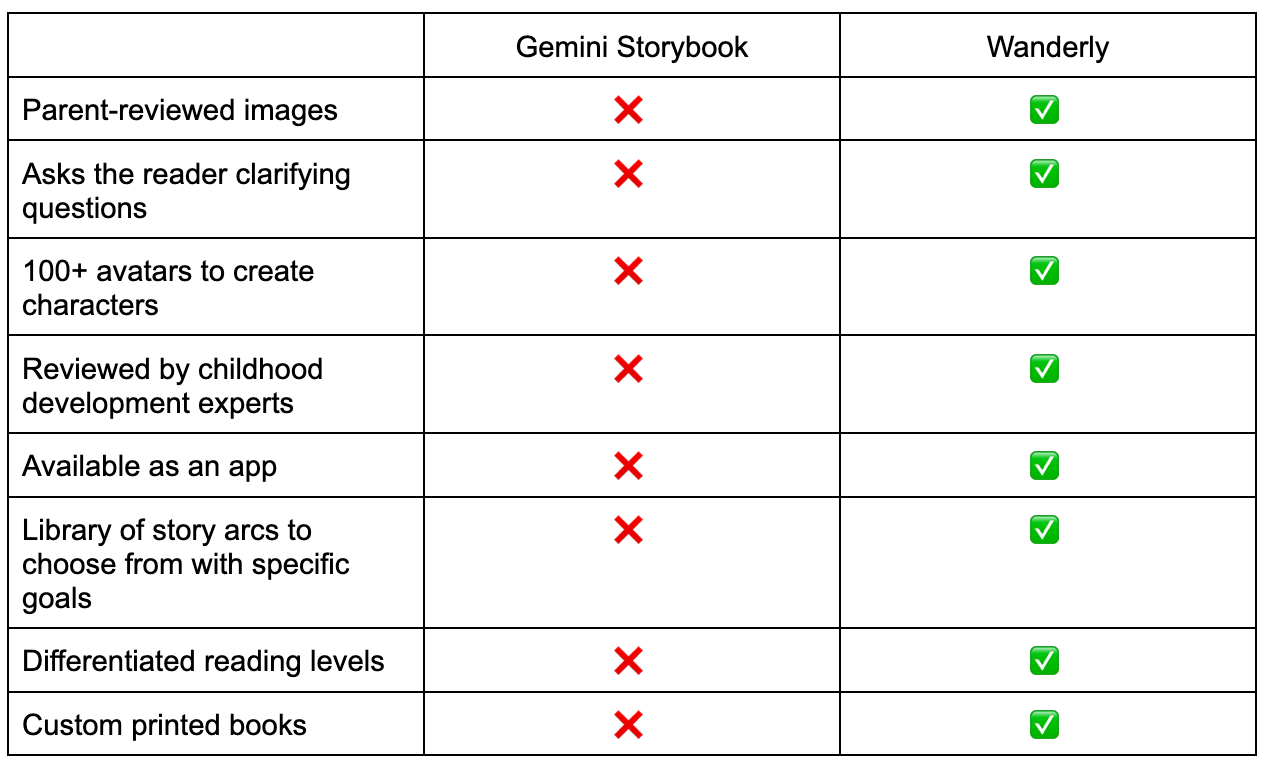

If you’re interested in checking out a personalized (and interactive!) story app that prioritizes child safety, is verified with experts, and is built by a mom, check out Wanderly. I’ve been building it for the past two years, and I have addressed many of the issues above and more.

The AI story space may seem trivial on the surface, but it has surprising depth, and getting it wrong can have unintended consequences.